ChatGPT, Google, and Meta Want to Own Your Next Trip

Skift Take

Generative artificial intelligence has made a big leap in the past few weeks, with some of the most significant advancements since it came on the scene 18 months ago.

OpenAI, Google, and Meta each released updated AI models this spring. And they all showed how they envision their chatbots as personal assistants that can understand text, video, photos, and audio.

Each of them used travel-related examples to show how they want users to adopt those assistants — potentially undermining travel companies releasing their own products built on top of tech from OpenAI and Google.

This topic will be part of the discussion during Skift's inaugural Data and AI Summit on June 4 in New York City. Speakers like Shane O’Flaherty of Microsoft and trip planning startup expert Gilad Berenstein will discuss how the industry is adopting AI — and how it's not.

Some initial testing shows that OpenAI’s latest generative AI model still produces factual errors (which it acknowledges), as is the case with every other model.

But the point is that these companies are pushing forward, and the future high-tech digital travel concierge is a little bit closer.

Below are detailed examples and analysis of how these AI chatbots — ChatGPT, Gemini, and Meta AI — are becoming better translators, tour guides, and trip planners.

Skift has tested some aspects, but not all of them are available yet.

Voice Translation

OpenAI is releasing new voice translation capabilities in the coming weeks that could unlock new destinations for international travelers.

That’s because the ChatGPT mobile app’s robotic voice — which is uncannily human-like — will act as a translator, according to demos.

Mobile users have been able to have voice conversations with ChatGPT since 2023, but what exists today is simple compared to what OpenAI says is coming next.

The new voicebot will be able to understand non-verbal cues like exhalations and tone of voice, pause to listen when interrupted, and recognize different voices in group conversations.

OpenAI also says that it can change its tone (such as speaking more excitedly or sarcastically), sing, and laugh. It appears that it can also speak languages in proper accents, according to the demos, while the existing voices seem to have American accents at all times.

This could break down language barriers that may keep travelers from visiting certain destinations.

Existing text translators, like Google Translate, are severely limited in their abilities. Clunkiness aside, they struggle to translate even between common languages, typically misunderstanding slang and idioms. For uncommon languages, they’re almost useless.

The ChatGPT voicebot sounds human-like today but operates essentially by hearing the voice, turning it into text, translating that text, and then reading the translation aloud — multiple steps that slow it down and can lead to glitches.

The upcoming version is voice-only, which OpenAI showed makes its responses instant, as if speaking with a human.

In a brief Skift test, the existing chatbot was able to understand and translate clips in Hungarian, Catalan, and Haitian Creole — three relatively uncommon languages. It was also able to understand Mexican slang, explain what it means, and provide context about when it's appropriate to use. And ChatGPT's language capabilities are getting even stronger, OpenAI said.

OpenAI published a blog post with multiple demos showing how it works. Near the bottom of the same post, it also showed where the chatbot can run into problems.

Google said it is releasing similar voice capabilities for Gemini this summer, though it has not demonstrated the tech yet.

Visual Translation

OpenAI and Meta are both pushing their tech's visual translation capabilities, as well. Each used the example of translating a menu.

This type of tool can be a big problem-solver when a restaurant does not have a menu in the traveler’s native language. Even for people who speak another language fairly well, deciphering menus can be difficult because they often contain words not used in everyday speech.

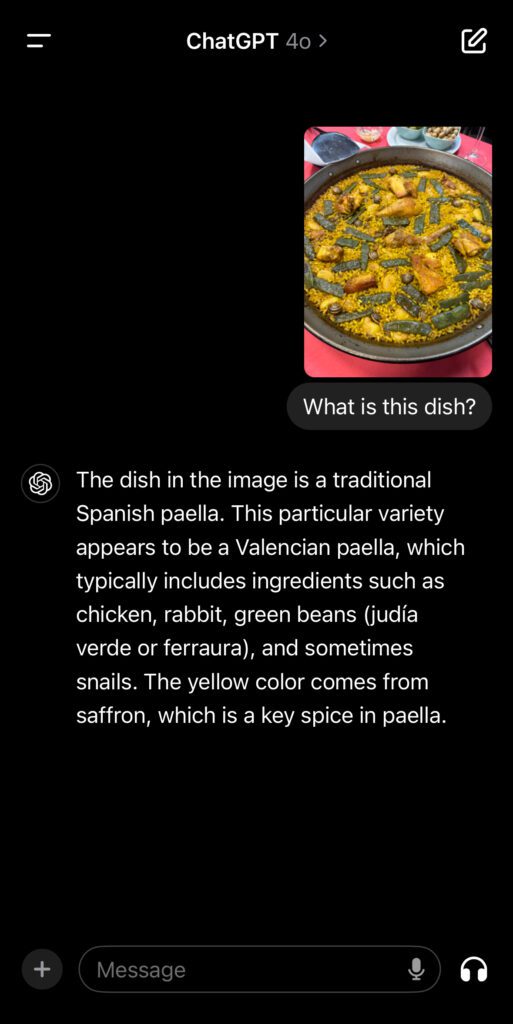

OpenAI said in a blog post that a user can now take a photo of a menu and ask ChatGPT to translate it, as well as provide cultural information about a dish and make recommendations about what to order.

Skift did a brief test on the ChatGPT mobile app, and the translation works fairly well — not perfectly — for photos and PDF files. And the user can follow up with questions about unfamiliar dishes or ingredients.

Meta highlighted that capability, too, during a recent announcement for the latest version of its Ray-Ban Meta smart glasses — though Skift has not tested them yet. If the user is traveling in France and needs help translating a menu, the glasses can show the translated text without the need to look at a phone screen, according to Meta.

Tour Guide

OpenAI, Google, and Meta all have said that their chatbots will be able to see and listen to their surroundings in real time. That could essentially turn them into virtual tour guides.

“Our vision for the Gemini app is to be the most helpful personal AI assistant by giving you direct access to Google’s latest AI models,” Sissie Hsiao, vice president of Google and general manager for Gemini experiences, said during a conference last week.

Meta announced recently that it has integrated the latest Meta AI model into the Ray-Ban Meta smart glasses. Mark Zuckerberg even described the glasses as an “AI assistant” during an earnings call last month: “You can let them see what you see and hear what you hear. So they have full context on what’s going on around you as they help you with whatever you’re trying to do.”

Tech influencer Cleo Abram showed how they can act as a guide in New York City through a paid partnership with Meta and Ray-Ban.

“They’re doing all this through Meta AI,” Abram said in the ad. “When I say, ‘look,’ it’s taking a picture, analyzing it, and then using a large language model to give me an answer.”

A prompt she gave while looking through the glasses at the Washington Square Arch: “Hey, Meta: Look and tell me, what’s the history of that monument?”

The chatbot’s voiced response: “It was built to commemorate the centennial of George Washington’s inauguration as president.”

Another question: “Hey, Meta: Look and tell me, what church is that?”

The response: “The name of this building is Washington Square Methodist Episcopal Church.”

ChatGPT and Gemini won't be able to read live video until later this year, but they can look at photos.

A Skift test showed that ChatGPT does pretty well. It’s not all-knowing, but it can use context to make good guesses.

A photo of the atrium inside the Orlando International Airport led to this response: “This image appears to be taken inside an atrium of a large building, likely an airport terminal or a hotel. The presence of palm trees, a fountain, and multiple levels with balconies suggests it might be the Orlando International Airport in Florida, USA. The architecture and indoor landscaping are distinctive features of this location.”

ChatGPT also gave context about a photo of paella from Valencia, which it accurately described as having rabbit, chicken, a type of green beans, and snails.

Gemini, on the other hand, doesn’t do so well yet with recognizing photos. When asked about the same photo, this was the response: “Sorry, I can’t help with images of people yet.”

Reimagined Search and Trip Planning

Google last week showed three concrete examples of how it’s investing in AI-powered trip planning, both through Gemini and the traditional search bar.

It was a substantial move compared to its competitors, showing Google is serious about helping customers plan trips, not just book them.

The trip planning capability for the paid Gemini Advanced platform hasn’t been released yet, but Skift has already been testing how the reimagined search bar works. It can be a bit finicky, but it’s a clear glimpse at how Google’s search results will look going forward: AI-generated summaries at the top and throughout, plus some lists and videos in the middle, with fewer traditional links interspersed.

Meta is also pushing its upgraded chatbot’s trip planning capabilities. The Meta AI chatbot can now answer travel questions within group chats in Messenger, WhatsApp, and Instagram. So, if a group is planning a trip together on WhatsApp, someone could ask Meta AI to suggest things to do in a new city or share info on flight availability.

Since generative AI chatbots became widely available in 2022, travel experts have predicted that Google and other large companies would be the biggest winners, and that small hotels and tour operators could suffer the most as their websites are pushed further down the page.

For travel companies with low internet traffic, like destination marketing organizations, the newly reimagined search could be the final nail in the coffin unless they rethink how they operate.

The Conclusion

OpenAI is the furthest along in creating a virtual personal assistant that can act as a tour guide.

Google is furthest along in creating useful trip planning tools that are integrated with booking options.

Meta’s glasses offer an interesting digital tour guide option, but the fact that they are glasses limits what users can do with them. And since Meta AI through WhatsApp and other apps can’t look at photos yet, the chatbot is furthest behind in terms of being an all-around useful assistant.